Alumnus Zeming Chen Helps Develop Worldwide AI Machine Meditron

Alumnus Zeming Chen, a computer science and mathematics double major, helped develop Meditron, the world’s best-performing open-source Large Language and Multimodal Model that is tailored to the medical field and designed to assist with clinical decision-making and diagnosis.

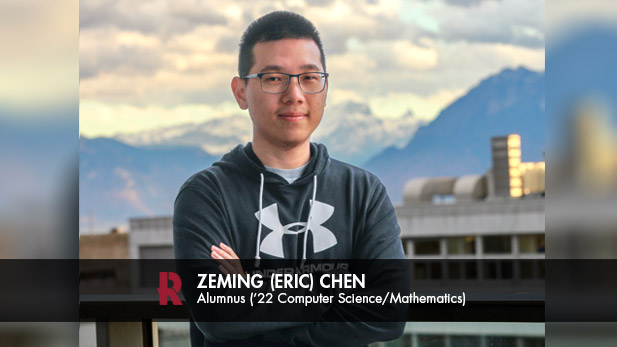

Two years ago, Zeming (Eric) Chen graduated from Rose-Hulman ready to embark on a summer internship with the Institute of Artificial Intelligence and begin his doctoral program at the Swiss Federal Institute of Technology in Lausanne, Switzerland. In that short time, Chen, a computer science and mathematics double major, has already made a significant impact in the world of AI. He helped develop Meditron, the world’s best-performing open-source Large Language and Multimodal Model that is tailored to the medical field and designed to assist with clinical decision-making and diagnosis.

A Large Language Model (LLM) is a deep learning model trained on massive amounts of text. During training, it adjusts billions of numerical values, known as parameters, that encode relationships between words, and it uses them to generate information and content. Most people are familiar with LLMs as the basis for chatbots like ChatGPT and Google’s Gemini (formerly Bard). Those are considered generalist models because they can find and produce information about thousands of different subjects. Meditron, by contrast, is trained specifically on high-quality medical data sources, including peer-reviewed medical literature and a diverse set of internationally recognized clinical practice guidelines from multiple countries, regions, hospitals and organizations.

Meditron was born of an idea at the Natural Language Processing Laboratory in Lausanne where Chen is a doctoral assistant.

“Our school is building a new supercomputer for AI training,” said Chen. “We wanted to do a beta test to make sure the new supercomputer can support the large-scale workflow it’s designed to do. … I proposed training a medical language model that eventually became Meditron.”

Chen was named project lead for Meditron, with several other labs around the world joining the effort.

“With projects like that, you need specialties from many domains,” said Chen. “We had people who focused on the system, distributed training, data storage, and we have people like us who specialize on AI and machine learning. We also had a lot of domain experts from the medical field and hospitals help us focus on the clinical practice aspect.”

Almost immediately, Meditron was being used across the world, including in hospitals and regions that lack trained medical diagnosticians and infrastructure. Doctors and other medical professionals use Meditron to help diagnose and understand what a patient is experiencing. For example, a clinician can enter a patient’s symptoms, and Meditron will return a list of possible diagnoses. This is especially impactful in low-resource regions that rely mainly on community workers. A panel of 16 international physicians evaluated Meditron on a set of adversarial medical questions and concluded that Meditron’s level of expertise is equivalent to or higher than that of a medical resident with up to five years of experience.

Elsewhere, the University Hospital of Lausanne (known as CHUV) is training Meditron for its emergency care department and for patient record processing. The International Committee of the Red Cross (ICRC) is planning to use the model to support low-resource regions around the world that lack access to this type of technology. In academia, medical schools, including Yale’s, want to improve Meditron for use in areas like cancer detection and drug discovery. Yale Medicine has several labs working on projects built upon Meditron, and Chen has received email inquiries for technical help from the medical school.

Meditron has drawn attention on social media from AI experts, including Clément Delangue, CEO of HuggingFace; Emad Mostaque, CEO of Stability AI; and Yann LeCun, recipient of the Turing Award, which is widely regarded as the computer science equivalent of the Nobel Prize. Chen was invited to give a presentation about Meditron at the ICRC’s conference at United Nations headquarters in Geneva in December 2023. Clinical workers from across the globe attended and learned how Meditron can help in remote regions.

Meditron continues to evolve as more features are added to the model. Chen is currently working to give Meditron “eyes” to see.

“We are developing a vision system based on Meditron so it can process radio imaging pathology reports so the model can look at images and make diagnoses, analysis and generate reports,” said Chen.

Meditron is not the only AI endeavor Chen has pursued as a PhD candidate at the Natural Language Processing Lab. His research focuses on developing LLMs that can perform complex tasks that currently only humans can do, in both the medical and mathematics domains. Chen is developing a learning algorithm to help these models learn more robustly and handle long-context, document-level reasoning.

“Right now, all the AI models need large amounts of training data to be able to perform a task,” said Chen. “My research is focused on how to make a model learn quicker with fewer examples. With a human, you only need two or three examples to adapt to a new task. My goal is to have the model do that as well.”

As part of his work in the Natural Language Processing Lab, Chen is also participating in the newly formed Swiss AI Initiative. His lab is focusing on developing a multilingual language model: AI that will perform in more than English and two sovereign languages.