They are not unique, because many different points in a scene may share an RGB value.

They are not invariant, because changes between images (for example, in lighting or exposure) may change the RGB value of the same scene point.

Let’s investigate the behavior of the Harris corner detector on the three image patches shown below.

$$
\begin{bmatrix} 2 & 2 & 2 \\ 2 & 2 & 2 \\ 0 & 0 & 0 \end{bmatrix}
\qquad
\begin{bmatrix} 0 & 2 & 2 \\ 0 & 2 & 2 \\ 0 & 0 & 0 \end{bmatrix}
\qquad
\begin{bmatrix} 2 & 2 & 2 \\ 0 & 2 & 2 \\ 0 & 0 & 2 \end{bmatrix}
$$

Compute the structure tensor for each of the above patches. I have it on good authority that these images are noise-free, so we can safely skip the Sobel filter and compute gradients using 3×1 and 1×3 centered finite difference filters and repeat padding.
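A minimal NumPy sketch of this computation follows. It reads each patch row by row and takes centered differences with the unnormalized kernel [-1, 0, 1]. This is an assumption: a 1/2 factor would scale every tensor by 1/4. Repeat padding comes from `np.pad(..., mode="edge")`. The gradient products are summed over all nine pixels with equal weight, which is also an assumption, since the problem specifies no window. The helper names `centered_gradients` and `structure_tensor` are hypothetical.

```python
import numpy as np

# The three patches from the problem, read row by row.
patches = [
    np.array([[2, 2, 2], [2, 2, 2], [0, 0, 0]], dtype=float),
    np.array([[0, 2, 2], [0, 2, 2], [0, 0, 0]], dtype=float),
    np.array([[2, 2, 2], [0, 2, 2], [0, 0, 2]], dtype=float),
]

def centered_gradients(img):
    """Hypothetical helper: centered differences with kernel [-1, 0, 1] along
    x (columns) and y (rows), using repeat (edge) padding at the border."""
    p = np.pad(img, 1, mode="edge")
    dx = p[1:-1, 2:] - p[1:-1, :-2]   # I(r, c+1) - I(r, c-1)
    dy = p[2:, 1:-1] - p[:-2, 1:-1]   # I(r+1, c) - I(r-1, c)
    return dx, dy

def structure_tensor(img):
    """Hypothetical helper: sum of [[dx*dx, dx*dy], [dx*dy, dy*dy]] over every
    pixel of the patch (uniform weights, no Gaussian window)."""
    dx, dy = centered_gradients(img)
    return np.array([[np.sum(dx * dx), np.sum(dx * dy)],
                     [np.sum(dx * dy), np.sum(dy * dy)]])

for i, patch in enumerate(patches, start=1):
    print(f"patch {i}:\n{structure_tensor(patch)}")
```

Under these assumptions the tensors are [[0, 0], [0, 24]], [[16, -4], [-4, 16]], and [[16, -12], [-12, 16]]. Measuring y upward instead of downward flips the sign of the off-diagonal entries but leaves the eigenvalues unchanged.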

Compute the smallest eigenvalue of each of the structure tensors you computed in the prior problem (with `np.linalg.eigvals`, or using the formula described here).

The smallest eigenvalues are 0, 12, and 4, respectively.
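As a check, the sketch below computes the smaller eigenvalue two ways. The first is NumPy's `np.linalg.eigvalsh`, which is meant for symmetric matrices and returns eigenvalues in ascending order. The second is the closed form for a symmetric 2×2 matrix, m − sqrt(m² − p), where m is half the trace and p is the determinant. The tensors are hard-coded. They assume repeat padding, [-1, 0, 1] differences, and an unweighted sum over each 3×3 patch. The function name `min_eig_closed_form` is hypothetical.

```python
import numpy as np

# Structure tensors of the three patches (repeat padding, [-1, 0, 1]
# differences, unweighted sum over the 3x3 patch).
tensors = [
    np.array([[0.0, 0.0], [0.0, 24.0]]),
    np.array([[16.0, -4.0], [-4.0, 16.0]]),
    np.array([[16.0, -12.0], [-12.0, 16.0]]),
]

def min_eig_closed_form(M):
    """Smaller eigenvalue of a symmetric 2x2 matrix [[a, b], [b, c]]:
    m - sqrt(m^2 - p), with m = trace / 2 and p = determinant."""
    a, b, c = M[0, 0], M[0, 1], M[1, 1]
    m = (a + c) / 2
    p = a * c - b * b
    return m - np.sqrt(max(m * m - p, 0.0))  # clip tiny negative round-off

for M in tensors:
    numeric = np.linalg.eigvalsh(M)[0]  # ascending order, so [0] is the smallest
    print(numeric, min_eig_closed_form(M))
```

Both columns agree: 0, 12, and 4. The closed form is what `harris_score` evaluates per pixel, which avoids calling an eigen-solver on every pixel.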

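```python
import numpy as np
from scipy import ndimage

# Assumed stand-ins for course helpers not defined in this section:
# 3x3 Sobel kernels and a 5-tap binomial approximation to a Gaussian.
sobel_x = np.array([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=float)
sobel_y = sobel_x.T
gauss1d5 = np.array([1, 4, 6, 4, 1], dtype=float) / 16

def convolve(img, kernel):
    # Zero padding, matching the behavior discussed below.
    return ndimage.convolve(img, kernel, mode="constant", cval=0.0)

def separable_filter(img, kernel1d):
    # Apply the same 1-D kernel along rows, then along columns (zero padding).
    rows = ndimage.convolve1d(img, kernel1d, axis=1, mode="constant", cval=0.0)
    return ndimage.convolve1d(rows, kernel1d, axis=0, mode="constant", cval=0.0)

def harris_score(img):
    """ Returns the smaller eigenvalue of the structure tensor for each pixel in img.

    Pre: img is grayscale, float, [0,1]. """
    dx = convolve(img, sobel_x)
    dy = convolve(img, sobel_y)
    # Gaussian-weighted entries of the structure tensor [[A, B], [B, C]].
    A = separable_filter(dx * dx, gauss1d5)
    B = separable_filter(dx * dy, gauss1d5)
    C = separable_filter(dy * dy, gauss1d5)
    det = A*C - B*B
    tr = A+C
    # Eigenvalues of a symmetric 2x2 matrix are m - sqrt(m^2 - p) and m + sqrt(m^2 - p).
    m = tr / 2
    p = det
    # Clip tiny negative values from floating-point round-off before the sqrt.
    sqrtm2mp = np.sqrt(np.maximum(m**2 - p, 0))
    eig1 = m - sqrtm2mp
    eig2 = m + sqrtm2mp
    return np.minimum(eig1, eig2)
```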

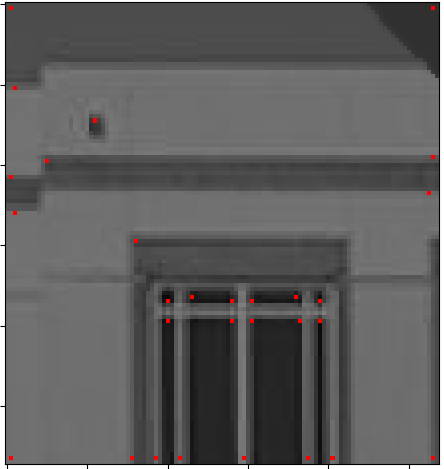

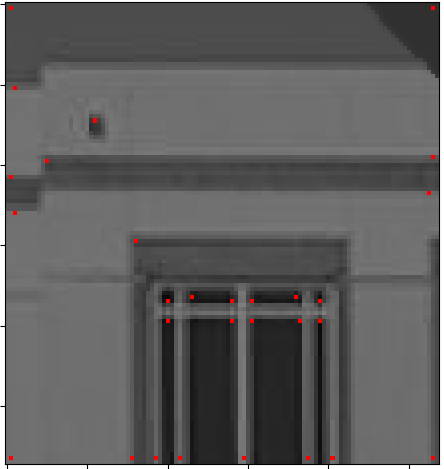

Some of these points would be better characterized as edge patches, rather than corner patches. Why did our code pick them up, and what would we need to change in order to get only things that really do look like corners in the image?

Our convolution does zero padding, so there’s an implicit edge around the border of the image where the intensity drops to zero. Wherever an image edge (such as the horizontal edges here) runs into the border, it meets this artificial edge, and the junction looks like a corner. The corners of the image itself also look like corners for the same reason. To prevent this, we’d need to use repeat or reflect padding.

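A poor threshold choice is one possible reason, but the points along the image border will be picked up even with a high threshold, because the artificial border edge creates strong gradients in two directions there.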
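To see the padding effect in isolation, here is a self-contained sketch that computes the same smaller-eigenvalue score with SciPy's `ndimage.convolve` and `ndimage.convolve1d`. It runs once with zero padding (`mode="constant"`) and once with repeat padding (`mode="nearest"`). The Sobel kernel, the 5-tap binomial Gaussian, the function name `min_eig_score`, and the 20×20 half-bright test image are all illustrative assumptions, not the course's originals.

```python
import numpy as np
from scipy import ndimage

SOBEL_X = np.array([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=float)
GAUSS5 = np.array([1, 4, 6, 4, 1], dtype=float) / 16  # assumed binomial weights

def min_eig_score(img, mode):
    """Hypothetical helper: smaller structure-tensor eigenvalue per pixel.
    `mode` is the SciPy padding mode used by every filter
    ("constant" = zero padding, "nearest" = repeat padding)."""
    dx = ndimage.convolve(img, SOBEL_X, mode=mode)
    dy = ndimage.convolve(img, SOBEL_X.T, mode=mode)

    def smooth(a):
        # Separable Gaussian window: rows, then columns.
        a = ndimage.convolve1d(a, GAUSS5, axis=1, mode=mode)
        return ndimage.convolve1d(a, GAUSS5, axis=0, mode=mode)

    A, B, C = smooth(dx * dx), smooth(dx * dy), smooth(dy * dy)
    m = (A + C) / 2
    return m - np.sqrt(np.maximum(m**2 - (A * C - B * B), 0))

# Hypothetical test image: bright top half, dark bottom half. Its only real
# feature is a horizontal edge, so it contains no true corners.
img = np.zeros((20, 20))
img[:10, :] = 1.0

zero_pad = min_eig_score(img, "constant")
repeat_pad = min_eig_score(img, "nearest")
print("zero padding, max score:  ", zero_pad.max())
print("repeat padding, max score:", repeat_pad.max())
```

With zero padding, the corners of the bright region, where it meets the image border, get large scores. With repeat padding, every row stays constant across the border, so the x-gradient vanishes and the score is zero everywhere. That is the behavior we want for an image that contains only an edge.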