CSSE 461 - Computer Vision

This assignment covers feature matching, geometric image transformations, and image warping.

Your homework solutions must be typewritten. Upload a single PDF to Gradescope. If you write a little code to answer a question, include the code in your answer.

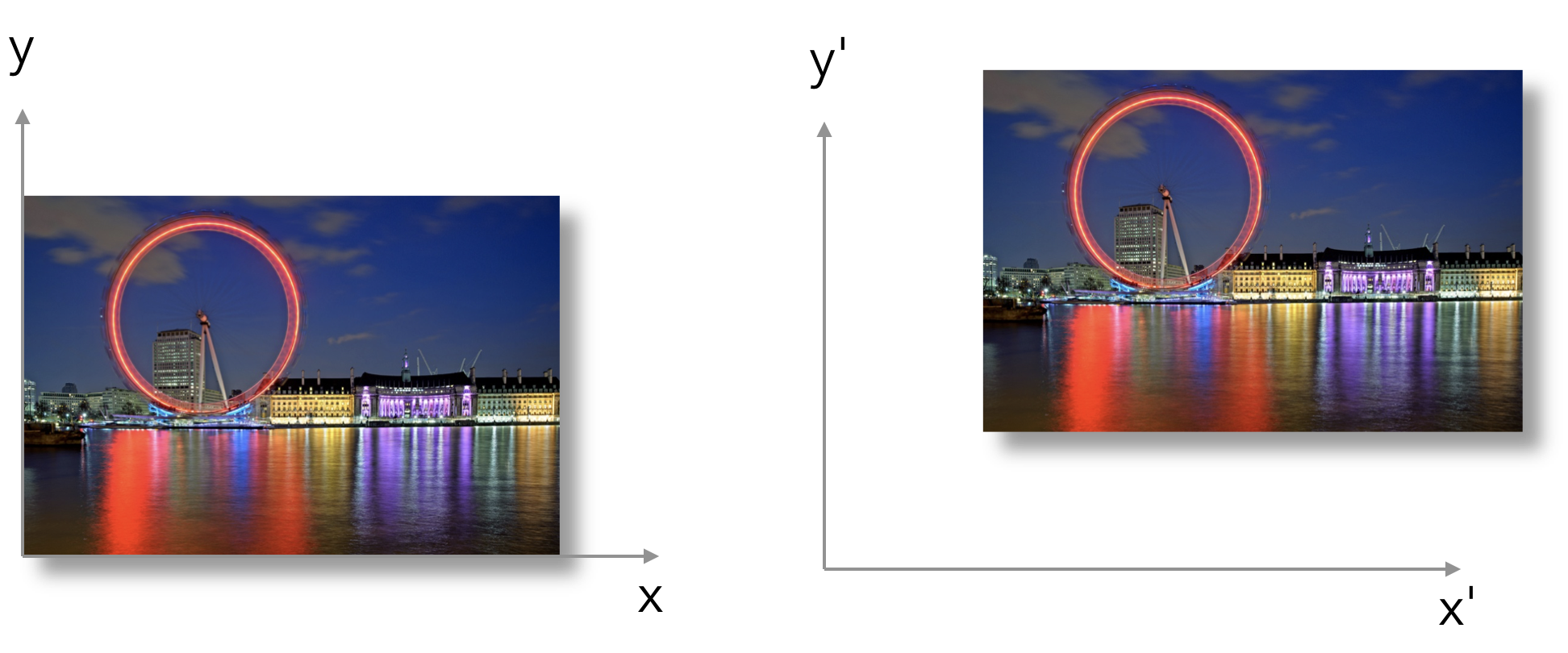

Problems 1-5 use the traditional math-class \((x,y)\) coordinate convention instead of the odd pixel coordinates that we’ve been using in this course: the origin is in the bottom-left corner of each image, the \(x\)-axis points right, and the \(y\)-axis points up. This is meant to make the problems more intuitive. Each transformation that you are asked to find should map \((x,y)\) coordinates in the left image to \((x', y')\) coordinates in the right image (not the other way around).

Find a 2×2 transformation matrix that scales uniformly by a factor of 1.3. As in all of these images, the right image’s \((0, 0)\) point is at its bottom-left corner, \(x\) points right, and \(y\) points up.

Find a 2×2 transformation matrix that scales by a factor of 2 in only the \(x\) direction.

Find a 2×2 transformation matrix that skews or shears the image so the top row of pixels is shifted over by 1/4 of the image’s height.

Find a 2×2 transformation matrix that rotates the image counter-clockwise by 30 degrees.

Find a 3×3 transformation matrix that shifts the image up and to the right by 40 pixels.
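One way to sanity-check a candidate matrix is to apply it to the corners of the left image and see where they land. The sketch below is only an illustration of that idea in plain Python: the helper names, the 100×50 image size, and the two example matrices (a mirror flip and a shift by (+5, -3)) are hypothetical and are deliberately not the matrices asked for above.

```python
def apply_2x2(M, p):
    """Apply a 2x2 matrix M (a list of rows) to a point p = (x, y)."""
    x, y = p
    return (M[0][0] * x + M[0][1] * y,
            M[1][0] * x + M[1][1] * y)


def apply_affine_3x3(T, p):
    """Apply a 3x3 matrix T to p = (x, y) written as [x, y, 1]^T.

    Assumes the last row of T is [0, 0, 1] (an affine transform),
    so no division by the homogeneous coordinate is needed.
    """
    x, y = p
    return (T[0][0] * x + T[0][1] * y + T[0][2],
            T[1][0] * x + T[1][1] * y + T[1][2])


# Hypothetical example matrices, not answers to the problems above.
flip_x = [[-1, 0],
          [0, 1]]            # mirror across the y-axis
shift = [[1, 0, 5],
         [0, 1, -3],
         [0, 0, 1]]          # translate by (+5, -3)

# Corners of an assumed 100x50 image, origin at the bottom left.
corners = [(0, 0), (100, 0), (100, 50), (0, 50)]

flipped = [apply_2x2(flip_x, p) for p in corners]
shifted = [apply_affine_3x3(shift, p) for p in corners]
print(flipped)
print(shifted)
```

The translation needs the 3×3 form because a 2×2 matrix always maps the origin to itself; appending a 1 to each point lets the third column carry the offset.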

Problems 6-8 are about the following two arrays of toy feature descriptors. The descriptors are 2-dimensional (far fewer dimensions than we would usually use in practice); each column of a matrix is the descriptor for one feature. In all of the following, use matrix-style \((i, j)\) indexing with indices starting at 1. For example, feature 4 in image 1 has descriptor \(\begin{bmatrix}3 & 1\end{bmatrix}^T\).

\[ F_1 = \begin{bmatrix} 0 & 1 & 4 & 3 \\ 1 & 0 & 4 & 1 \end{bmatrix} \]

\[ F_2 = \begin{bmatrix} 2 & 5 & 1\\ 1 & 5 & 2 \end{bmatrix} \]

Create a table with 4 rows and 3 columns in which the \((i,j)\)-th cell contains the SSD (sum of squared differences) distance between feature \(i\) in image 1 and feature \(j\) in image 2.

For each feature in image 1, give the index of the closest feature match in image 2 using the SSD metric.

For each feature in image 2, give the index of the closest feature match in image 1 and the ratio distance between each feature and its closest match.
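If you want to check hand calculations like these with a little code, a sketch along the following lines may help. It is plain Python, the function names are made up for illustration, and the descriptor matrices `G1` and `G2` are hypothetical stand-ins rather than \(F_1\) and \(F_2\). It also assumes that "ratio distance" means the closest SSD divided by the second-closest SSD; use the definition from class if it differs.

```python
def ssd(a, b):
    """Sum of squared differences between two equal-length descriptors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def columns(F):
    """Split a matrix (given as a list of rows) into its column vectors."""
    return [list(col) for col in zip(*F)]


def ssd_table(FA, FB):
    """table[i][j] = SSD between column i of FA and column j of FB (0-based)."""
    return [[ssd(a, b) for b in columns(FB)] for a in columns(FA)]


def best_match_and_ratio(row):
    """Return (1-based index of the closest match, closest / second-closest).

    Assumed definition of the ratio distance; it is undefined when the
    second-closest SSD is zero.
    """
    order = sorted(range(len(row)), key=lambda j: row[j])
    best, second = order[0], order[1]
    return best + 1, row[best] / row[second]


# Hypothetical toy descriptors (one descriptor per column).
G1 = [[0, 3],
      [0, 4]]          # columns (0, 0) and (3, 4)
G2 = [[1, 3, 6],
      [0, 5, 8]]       # columns (1, 0), (3, 5), and (6, 8)

table = ssd_table(G1, G2)
for i, row in enumerate(table, start=1):
    print(i, row, best_match_and_ratio(row))
```

To match in the other direction (from the second image to the first), the same helper can be run on the transposed table, for example `list(zip(*table))`.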

Suppose you’ve aligned two images from feature matches under a translational motion model; that is, you have a vector \(\mathbf{t} = \left[t_x, t_y\right]\) that specifies the offset of corresponding pixels in image 2 from their coordinates in image 1. We’d like to warp image 2 into image 1’s coordinates and combine the two together using some blending scheme (maybe we’ll average them or something).

Give a 3×3 affine transformation matrix that can be used to warp image 2 into image 1’s coordinates.

If image 1’s origin is at its top left and \(t_x\) and \(t_y\) are both positive, what is the size of the smallest destination image that can contain the combined image? Let \(h_1, w_1\) and \(h_2, w_2\) be the heights and widths of the two input images, respectively.

Write down the \(x\) and \(y\) residuals for a pair of corresponding points \((x, y)\) in image 1 and \((x', y')\) in image 2 under a homography (projective) motion model. Assume the homography matrix is parameterized as: \[ \begin{bmatrix} a & b & c\\ d & e & f\\ g & h & 1 \end{bmatrix} \]
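As a reminder of how a projective transformation acts on a point, here is a minimal plain-Python sketch. The function name and the example matrix are hypothetical; the only thing it relies on is the usual convention of multiplying \([x, y, 1]^T\) by the 3×3 matrix and then dividing by the third coordinate.

```python
def apply_homography(H, p):
    """Map p = (x, y) through a 3x3 homography H (a list of rows).

    The point is lifted to [x, y, 1]^T, multiplied by H, and divided by
    the resulting third (homogeneous) coordinate.
    """
    x, y = p
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (xh / w, yh / w)


# Hypothetical homography with a nonzero projective term in the bottom row.
H = [[1, 0, 2],
     [0, 1, 3],
     [0.5, 0, 1]]

mapped = apply_homography(H, (2, 4))
print(mapped)
```

The division by `w` is what makes the mapping nonlinear in the matrix entries, which is worth keeping in mind when writing the residuals.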