Enough of defense,

Onto enemy terrain.

Capture all their food!

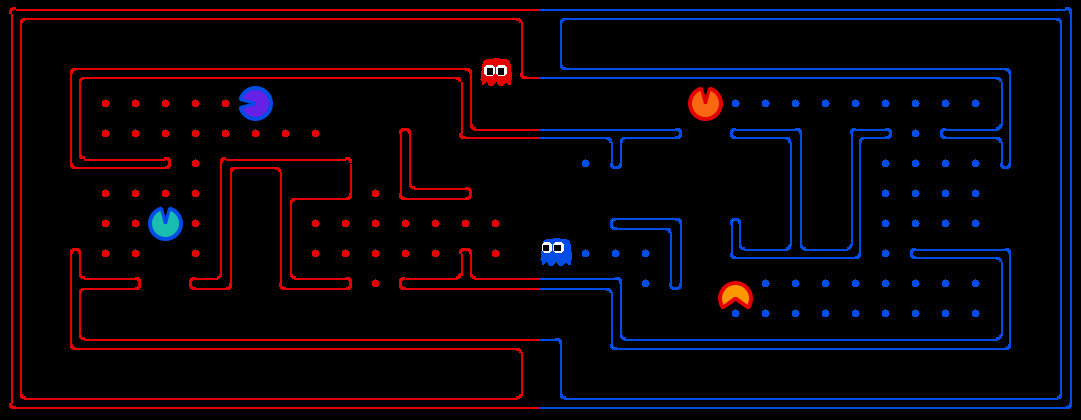

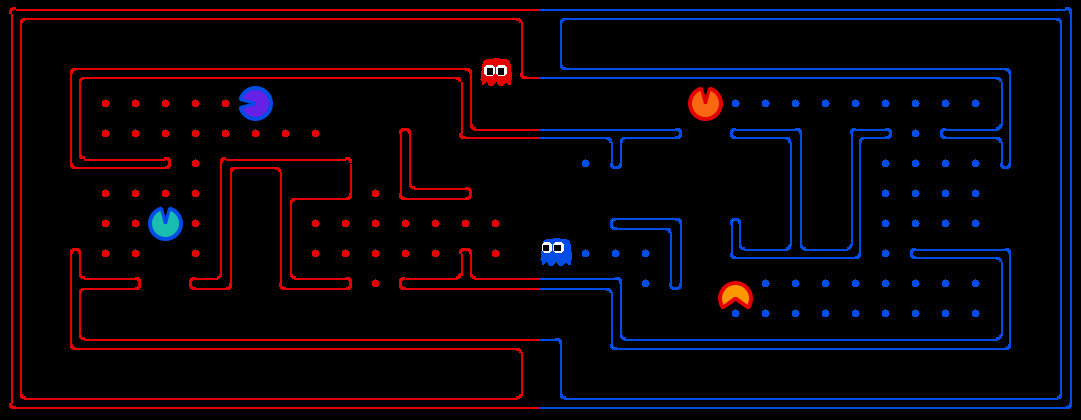

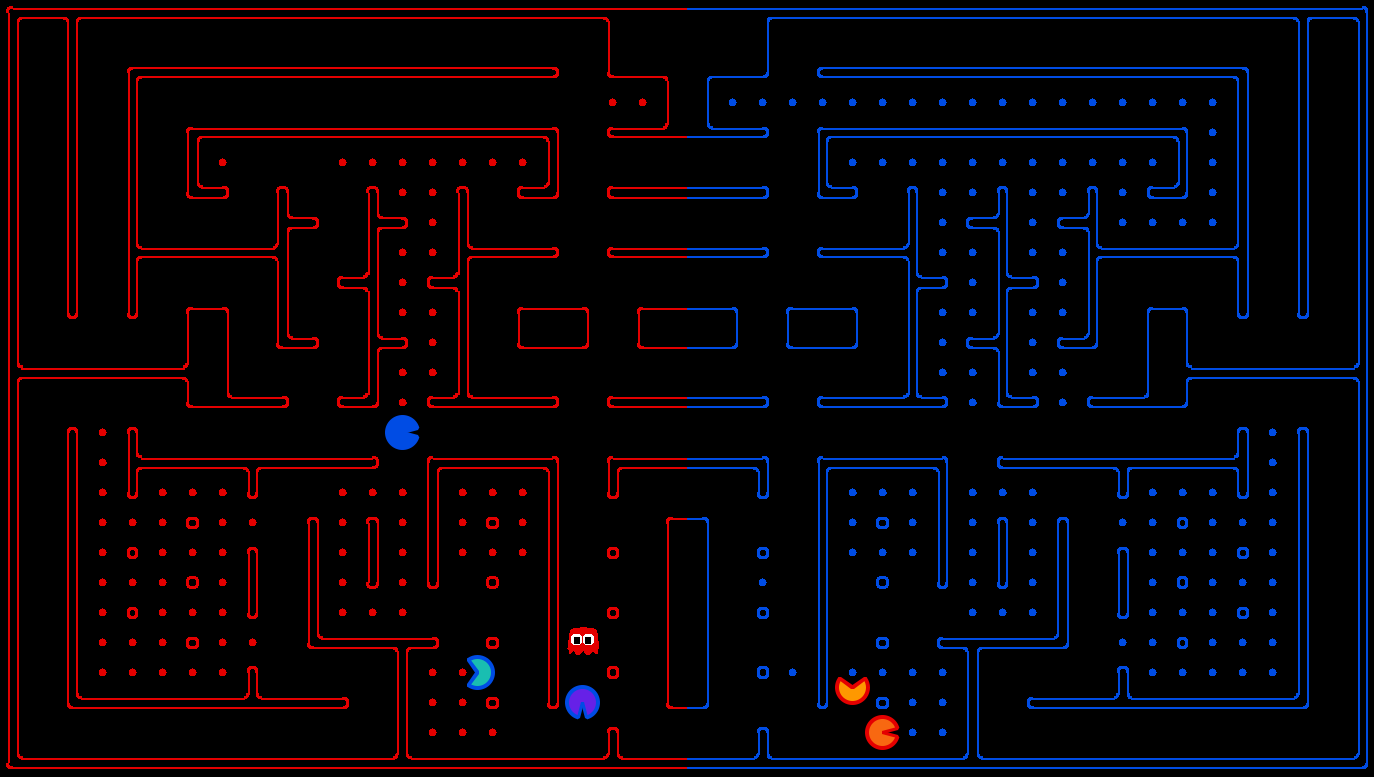

This project involves a multi-player capture-the-flag variant of Pacman, where agents control both Pacman and ghosts in coordinated team-based strategies. Your team will try to eat the food on the far side of the map, while defending the food on your home side. The code and the supporting documents are available as a zip archive.

| Key files to read: | |
|---|---|
| capture.py | The main file that runs games locally. This file also describes the new capture the flag GameState type and rules. |
| captureAgents.py | Specification and helper methods for capture agents. |
| baselineTeam.py | Example code that defines two very basic reflex agents, to help you get started. |
| myTeam.py | This is where you define your own agents for inclusion in the nightly tournament. (This is the only file that you submit.) |

| Supporting files (do not modify): | |
|---|---|
| game.py | The logic behind how the Pacman world works. This file describes several supporting types like AgentState, Agent, Direction, and Grid. |
| util.py | Useful data structures for implementing search algorithms. |
| distanceCalculator.py | Computes shortest paths between all maze positions. |
| graphicsDisplay.py | Graphics for Pacman |
| graphicsUtils.py | Support for Pacman graphics |
| textDisplay.py | ASCII graphics for Pacman |
| keyboardAgents.py | Keyboard interfaces to control Pacman |
| layout.py | Code for reading layout files and storing their contents |

Academic Dishonesty: While we won't grade contests, we still expect you not to falsely represent your work. Please don't let us down.

Scoring: When a Pacman eats a food dot, the food is permanently removed and one point is scored for that Pacman's team. Red team scores are positive, while Blue team scores are negative.
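Because the global score is positive when Red leads and negative when Blue leads, each team has to read it from its own side. The sketch below shows that sign flip in isolation; `team_relative_score` is a hypothetical helper name used only for illustration, not part of the project code.

```python
def team_relative_score(raw_score, is_red):
    """Convert the global score (positive favors Red) into one team's view.

    raw_score: the game score as described above (Red positive, Blue negative).
    is_red:    True when the caller is on the red team.
    """
    return raw_score if is_red else -raw_score


# Red has eaten three more dots than Blue.
print(team_relative_score(3, is_red=True))
print(team_relative_score(3, is_red=False))
```

A positive result means the calling team is ahead, whichever color it plays.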

Eating Pacman: When a Pacman is eaten by an opposing ghost, the Pacman returns to its starting position (as a ghost). No points are awarded for eating an opponent.

Power capsules: If Pacman eats a power capsule, agents on the opposing team become "scared" for the next 40 moves, or until they are eaten and respawn, whichever comes sooner. Agents that are "scared" are susceptible while in the form of ghosts (i.e. while on their own team's side) to being eaten by Pacman. Specifically, if Pacman collides with a "scared" ghost, Pacman is unaffected and the ghost respawns at its starting position (no longer in the "scared" state).

Observations: Agents can only observe an opponent's configuration (position and direction) if they or their teammate is within 5 squares (Manhattan distance). In addition, an agent always gets a noisy distance reading for each agent on the board, which can be used to approximately locate unobserved opponents.
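The visibility rule can be sketched as a small stand-alone check. This is an illustration of the rule as stated above, not the project's own implementation; `SIGHT_RANGE`, `manhattan`, and `is_observable` are names chosen for this example, and "within 5 squares" is assumed to include a distance of exactly 5.

```python
SIGHT_RANGE = 5  # squares, measured in Manhattan distance (from the rule above)


def manhattan(a, b):
    """Manhattan distance between two (x, y) positions."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def is_observable(opponent_pos, team_positions, sight_range=SIGHT_RANGE):
    """True if any teammate is close enough to see the opponent exactly."""
    return any(manhattan(opponent_pos, p) <= sight_range for p in team_positions)


# One teammate at (0, 0), another at (9, 7).
team = [(0, 0), (9, 7)]
print(is_observable((10, 10), team))  # the second teammate is 4 squares away
print(is_observable((20, 1), team))   # nobody is within range
```

When this check fails for an opponent, only the noisy distance reading is available for locating it.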

Winning: A game ends when one team eats all but two of the opponents' dots. Games are also limited to 1200 agent moves (300 moves for each of the four agents). If this move limit is reached, whichever team has eaten the most food wins. If the score is zero (i.e., tied), the game is recorded as a tie.
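The end-of-game rules reduce to a little arithmetic, sketched below. The constant and function names are hypothetical and only mirror the numbers stated in the rules; the score is assumed to follow the convention that positive values favor Red.

```python
MOVES_PER_AGENT = 300
NUM_AGENTS = 4
MOVE_LIMIT = MOVES_PER_AGENT * NUM_AGENTS  # 1200 agent moves in total
MIN_FOOD = 2  # a team wins early once the opponents have this many dots left


def has_cleared_food(opponent_dots_remaining, min_food=MIN_FOOD):
    """True once a team has eaten all but `min_food` of the opponents' dots."""
    return opponent_dots_remaining <= min_food


def outcome_at_move_limit(score):
    """Decide the result when the move limit is reached.

    score: global score, positive when Red has eaten more food.
    """
    if score > 0:
        return "Red"
    if score < 0:
        return "Blue"
    return "Tie"


print(MOVE_LIMIT)
print(outcome_at_move_limit(-4))
```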

By default, you can run a game with the baselineTeam that the staff has provided:

python capture.py

A wealth of options are available to you:

python capture.py --help

There are four slots for agents, where agents 0 and 2 are always on the red team, and 1 and 3 are on the blue team. Agents are created by agent factories (one for Red, one for Blue). See the section on designing agents for a description of the agents invoked above. The only team that we provide is the baselineTeam. It is chosen by default as both the red and blue team, but as an example of how to choose teams:

python capture.py -r baselineTeam -b baselineTeam

which specifies that the red team (-r) and the blue team (-b) are both created from baselineTeam.py.

To control one of the four agents with the keyboard, pass the appropriate option:

python capture.py --keys0

The arrow keys control your character, which will change from ghost to Pacman when crossing the center line.

By default, all games are run on the defaultcapture layout. To test your agent on other layouts, use the -l option.

In particular, you can generate random layouts by specifying RANDOM[seed]. For example, -l RANDOM13 will use a map randomly generated with seed 13.

Baseline Team: To kickstart your agent design, we have provided you with a team of two baseline agents, defined in baselineTeam.py. They are both quite bad. The OffensiveReflexAgent moves toward the closest food on the opposing side. The DefensiveReflexAgent wanders around on its own side and tries to chase down invaders it happens to see.

File naming: For the purpose of testing or running games locally, you can define a team of agents in any arbitrarily named Python file. When submitting to the nightly tournament, however, you must define your agents in myTeam.py (and you must also create a name.txt file that specifies your team name).

Interface: The GameState in capture.py should look familiar, but contains new methods like getRedFood, which gets a grid of food on the red side (note that the grid is the size of the board, but is only true for cells on the red side with food). Also, note that you can list a team's indices with getRedTeamIndices, or test membership with isOnRedTeam.

Distance Calculation: To facilitate agent development, we provide code in distanceCalculator.py to supply shortest path maze distances.
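To illustrate what a shortest-path maze distance means, and how it differs from Manhattan distance when walls are in the way, here is a minimal breadth-first search sketch. It is not the code in distanceCalculator.py; the `maze_distance` function, the `TINY_MAZE` layout, and the coordinate convention (x is the column, y is the row of the string list) are all assumptions made for this example.

```python
from collections import deque


def maze_distance(walls, start, goal):
    """Fewest steps from start to goal, or None if goal is unreachable.

    walls: list of equal-length strings; '%' marks a wall cell.
    Positions are (x, y) with x the column and y the row index into `walls`.
    """
    if start == goal:
        return 0
    frontier = deque([(start, 0)])
    visited = {start}
    while frontier:
        (x, y), dist = frontier.popleft()
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            # Skip cells outside the grid, walls, and cells already seen.
            if not (0 <= ny < len(walls) and 0 <= nx < len(walls[0])):
                continue
            if walls[ny][nx] == '%' or (nx, ny) in visited:
                continue
            if (nx, ny) == goal:
                return dist + 1
            visited.add((nx, ny))
            frontier.append(((nx, ny), dist + 1))
    return None


TINY_MAZE = [
    "%%%%%%%",
    "%     %",
    "% %%% %",
    "%     %",
    "%%%%%%%",
]

# (3, 1) and (3, 3) are 2 apart in Manhattan distance, but a wall sits between them.
print(maze_distance(TINY_MAZE, (3, 1), (3, 3)))  # → 6
```

The gap between the two measures is why an agent that chases food or invaders by Manhattan distance can walk into dead ends.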

To get started designing your own agent, we recommend subclassing the CaptureAgent class. This provides access to several convenience methods. Some useful methods are:

    def getFood(self, gameState):
        """
        Returns the food you're meant to eat. This is in the form
        of a matrix where m[x][y]=true if there is food you can
        eat (based on your team) in that square.
        """

    def getFoodYouAreDefending(self, gameState):
        """
        Returns the food you're meant to protect (i.e., that your
        opponent is supposed to eat). This is in the form of a
        matrix where m[x][y]=true if there is food at (x,y) that
        your opponent can eat.
        """

    def getOpponents(self, gameState):
        """
        Returns agent indices of your opponents. This is the list
        of the numbers of the agents (e.g., for red this might be [1, 3]).
        """

    def getTeam(self, gameState):
        """
        Returns agent indices of your team. This is the list of
        the numbers of the agents (e.g., for red this might be [0, 2]).
        """

    def getScore(self, gameState):
        """
        Returns how much you are beating the other team by in the
        form of a number that is the difference between your score
        and the opponent's score. This number is negative if you're
        losing.
        """

    def getMazeDistance(self, pos1, pos2):
        """
        Returns the distance between two points. Distances are calculated
        using the provided distancer object.

        If distancer.getMazeDistances() has been called, then maze distances
        are available. Otherwise, this just returns Manhattan distance.
        """

    def getPreviousObservation(self):
        """
        Returns the GameState object corresponding to the last
        state this agent saw (the observed state of the game last
        time this agent moved - this may not include all of your
        opponent's agent locations exactly).
        """

    def getCurrentObservation(self):
        """
        Returns the GameState object corresponding to this agent's
        current observation (the observed state of the game - this
        may not include all of your opponent's agent locations
        exactly).
        """

    def debugDraw(self, cells, color, clear=False):
        """
        Draws a colored box on each of the cells you specify. If clear is True,
        will clear all old drawings before drawing on the specified cells.
        This is useful for debugging the locations that your code works with.

        color: list of RGB values between 0 and 1 (e.g. [1,0,0] for red)
        cells: list of game positions to draw on (e.g. [(20,5), (3,22)])
        """
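As a sketch of how these convenience methods might be combined, the snippet below picks the nearest food dot from a food matrix of the kind getFood describes (`m[x][y]` is true where there is food). It runs on plain Python lists rather than the project's own types, so `food_cells`, `closest_food`, and the Manhattan stand-in are hypothetical helpers. In a CaptureAgent subclass, the matrix would presumably come from getFood and the distance function would be getMazeDistance, but that wiring is an assumption and is not exercised here.

```python
def food_cells(grid):
    """List the (x, y) positions where grid[x][y] is true."""
    return [(x, y)
            for x, column in enumerate(grid)
            for y, has_food in enumerate(column)
            if has_food]


def closest_food(my_pos, food_positions, distance_fn):
    """Return (distance, position) of the nearest food, or None if no food is left."""
    if not food_positions:
        return None
    return min((distance_fn(my_pos, food), food) for food in food_positions)


def manhattan(a, b):
    """Stand-in distance; an agent would likely pass its maze distance instead."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


# A 3-wide, 2-tall board indexed as grid[x][y], with food at (0, 1) and (2, 0).
grid = [[False, True], [False, False], [True, False]]
print(closest_food((2, 1), food_cells(grid), manhattan))  # → (1, (2, 0))
```

Passing the distance function as a parameter keeps the selection logic easy to test away from the game engine.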

Have fun! Please bring our attention to any problems you discover.